Fucking AI: Thoughts on Neural Networks, Art, and Driverless Culture.

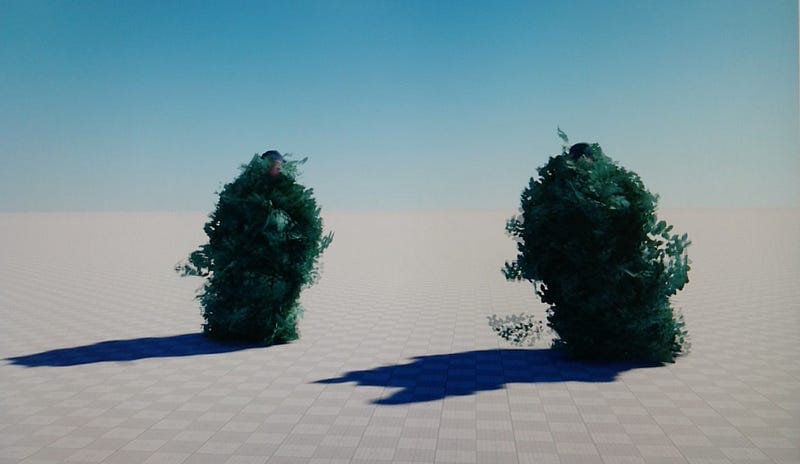

What are the secondary and tertiary implications of the emergence of machine learning for the creative community? My friend and long-term collaborator John Gerard’s LACMA research project, Neural Exchange, created the perfect chance to sum up my thinking on the topic.

Thanks to LACMA’s Art + Technology Lab for supporting the project.

Define: a neural network, or machine learning, or artificial intelligence.

These are loose terms for a topic that is a bit like quantum physics. It is a transformative form of computing that allows machines to effectively ‘learn’ from huge databases of information called ‘libraries’ until the software itself can ‘create’ new content. ‘The content’ may mean anything from literally driving a truck across America to composing a Bach-like fugue. The outputs of artificial intelligence are limited by the design of the network and the quality of the training library. But the networks are capable of a kind of regression analysis that identifies patterns in data far beyond the scope of human faculties, leading to outcomes that can seem either eerie or extraordinary. Like a truck that drives itself. Many of us will understand that these networks exist but feel life is too short to care. For us, neural networks might as well literally be quantum physics: undeniably important, definitely real and mind-numbingly hard to comprehend.

Already we are beginning to see AI’s role in driverless transport. This will revolutionise human infrastructure over the next decade and will certainly be one of the most obvious benefits of ‘machine learning’. Just one part of that ‘revolution’ will be reducing the 1.3 million deaths (and 20 million injuries) caused on the roads each year, mainly by human drivers. And for those still having accidents, the arrival of driverless diagnosis will transform the medical industry, freeing the art of diagnosis from human bias, exhaustion or simple prejudice. Or, at the very least, it will provide a pretty impressive second opinion.

This is leading to some unusual academic programs. For example, in 2017 a peer-reviewed Stanford article reported 91% accuracy in distinguishing the sexual orientation of men, using a deep neural network and facial images alone (given five photographs per person). In other words, it had a 91% gaydar hit-rate. Humans score little better than chance in the test used (around 61% for men), in a task where you choose between two faces and a blind guess is right half the time. The humans can barely tell; the machine can. It has learnt some skill that we cannot divine. We cannot ask what it has learnt; we can only conjecture while the neural network improves on its statistic.

The study also notes that many countries in the world have criminal statutes regarding homosexuality and actively pursue this model of law enforcement.

The laws in many countries criminalize same-gender sexual behavior, and in eight countries — including Iran, Mauritania, Saudi Arabia, and Yemen — it is punishable by death (UN Human Rights Council, 2015)

- Deep neural networks are more accurate than humans at detecting sexual orientation from facial images.

Wang & Kosinski, September 2017. https://psyarxiv.com/hv28a/

(nb. the study only used Caucasian faces and did not attempt to account for queer, transgender or bisexual people.)

Authors Wang & Kosinski argue that, if utilised universally, such technology could result in the legal imprisonment or death of LGBTQ people, and that the accuracy of such technology is therefore of crucial importance to policy-makers, lawyers, human-rights advocates and, naturally, the homosexual community. It is perhaps an example of demonstrating the dangers of opening Pandora’s box by opening the actual box. The point is not that this is a probable apocalyptic scenario, especially given the number of apocalyptic scenarios that appear more likely at the time of writing. However, for the queer community it is simply the silent fog-horn of heteronormative bias in ‘machine learning’. We are now in a strange place where the potential models of control for future generations are being developed by a tiny subset of a demographic with a singular mindset, low empathic or social skills, and fixed cultural norms. Groups of similar minds are building artificial minds that learn from data gathered from a global digital hive mind, with all its many prejudices.

At an industry level, this academic naivety is echoed with enthusiasm, devolving decision-making to models of pattern recognition that defy analysis or synthesis into human-readable ‘knowledge’. Patterns are derived from data that is weighed, considered or analysed by a ‘training process’, which looks for the optimal number of variables and avoids omission bias, as if the existing models of behaviour online were the epitome of human behaviour and intellect. It creates the spectre of a world of knowledge that is algorithmically derived and unreadable: a world based on the injustice, idiocy and entrenched biases of majority-think, with none of the vision, idealism, unilateral capacity or romance of the solitary human imagination. Sadly, neural networks cannot correct for bigotry. The problem is not helped by the sense that there is no place for the humanities in this new world order. Code. Math. Science. Engineering. These hold the keys not only to global economic power but to global culture as well. After all, what are the options? Poets?

Across the centuries, a number of artists have examined both the cyclical and cynical qualities of ‘power’, whether celestial, economic or military, but rarely do they stir us to action; they tend to the reflective, not the imperative. In a present of algorithmic bias, cyber warfare and drone surveillance, our artists are often more elegiac than prophetic. For progress, we look to industry.

The World Economic Forum lists, among the top ten problems facing the world, Food Security, Social Exclusion, Global Finance, Gender Parity and even the Future of the Internet. Sundar Pichai wrote in Google’s 2016 Founders’ Letter of “creating artificial intelligence that can help us in everything from accomplishing our daily tasks and travels, to eventually tackling even bigger challenges like climate change and cancer diagnosis.” So the potential is not insignificant.

Accordingly, artificial intelligence will be solving cancer, fixing social inequality or preventing global warming. Currently, it is writing screenplays and making music. ‘Humans making culture’ is not on the World Economic Forum’s list of the world’s top ten problems, and neither is telling gay men from straight men. There is no known problem with the creation of ‘art’. We make a lot of art. Some, perhaps most, is awful. Some is commercially successful, some critically successful, and some is transcendent genius that allows us to see the world in new ways: ‘art’ that changes the way society exists and understands itself. In some ways art is in robust health (whilst chronically underfunded). But underfunding is the least of its worries.

Let’s move for a moment to the secondary and tertiary consequences of our artificial or ‘driverless’ culture. What will MoMA show? And how do our children evolve into artists if there is no economy supporting the early, grunt-work years of an artist, when everything we make is, kind of, bad? Certainly worse than what an AI would produce. Who will pay for the bad art? The implications of artificial intelligence disrupting the structure of the creative economy at entry level are interesting to consider. The endless tide of words, pictures, music and film currently generated, edited and curated by humans hides the fact that the humans involved are learning at the same time. That process is likely to get automated. We are drifting past headlines like “Google’s art machine just wrote its first song” or “ROBO TUNES: This is what music written by AI sounds like”. In literature, there is “This AI is really good at writing emo poetry”. The range runs from the arthouse, “This short film was written by a sci-fi-hungry neural network”, to the multiplex, “IBM’s Watson sorted through over 100 film clips to create an algorithmically perfect movie trailer”. Considering this is an industry that is not really broken, why ‘fix’ it?

The language used can be unsettlingly anthropomorphic. There is a reliance on soft phrases like the ‘training’ of AIs, or on saying that art, music or scripts were ‘found’ on the internet, then ‘fed’ or ‘shown’ to the computer. A human artist certainly goes through this process, and we cannot tell whether the human algorithm invests anything new in it; perhaps we, too, only ever derive our outputs? “There is no such thing as a new idea,” said Mark Twain, and, according to Google, so did every other writer ever. Yet we intuit that humans can synthesise, and we are also pretty sure that a mechanical algorithm can only derive. We have finally arrived at a real question that has long been a hypothetical sci-fi staple: can an artefact create?

A new generation of artists will emerge having always worked with machine intelligence, and doubtless to this generation these entities will simply be ‘tools’, analogous to a camera or the lightbulb. For a generation or two, these artists will also, necessarily, be computer engineers who have the skills, or who can afford to employ a team through patronage or funding. It is perhaps not the most democratic of prospects for culture, but this is progress, or maybe a regression to the Renaissance studio model. It is back on the industrial shop floor that the implications are perhaps more complicated. In many futures, the part being automated is the human input. The neural network simply mimics and reverse-engineers historic human creative processes in order to generate cultural content that is equal to or better than human output. We might ask what benefit this brings to either artists or society. What are the secondary consequences of ‘libraries’ of culture in which the works of Shakespeare need not be attributed, or musicians remunerated, because the output is a novel ‘creation’? Even as the educators of our automators, we cannot imagine that there is a glowing future for humanity in that industry. Just as for truck drivers. Or for the queer community.

The effort to literally automate the creation of culture is considerable, yet presumably not the ultimate goal. It is more likely that cultural output is a convenient playground for the ‘driverless’ future. We are not expecting the tech giants to linger long in the playground. It is a second generation of perhaps less well-intentioned corporations that will likely be watching, and quite often consumers who are presented with the bill when it is too late to decline. So we see echoes of the future of algorithmic culture through a spectrum of dancing soldiers, machine-learnt Mozart and cut-up sci-fi. We are painted an algorithmic future not because it is needed but because you can’t hurt anyone with a film trailer, whereas automobiles, drones, emergency rooms and financial services leave a little more room for liability.

And, since we’re inside this circus of trust, we should admit that most of us won’t be able to tell human culture from algorithmic. It will be like picking wine at a restaurant. And if we can’t tell, how can we care? Will we build complicated ‘organic’ guidelines, a kitemark, a taste test to ensure our culture isn’t just being churned out by a poor, basement-dwelling Chinese supercomputer? It would be nice if humans were cultured enough to say “this is intolerable”, but I think we all know we aren’t, and we won’t, at least not until we can no longer recall what we have lost.

nb: this is a ‘personal’ opinion.

I wrote it on a computer, using machine-assisted auto-correct. All the characters were typed by me. In this sequence.